HelloWorld.R

print("Hello world!")Gaëlle Lefort

Alyssa Imbert

Julien Henry

Nathalie Vialaneix

January 18, 2024

DO NOT run treatments on frontal servers, always use sbatch or srun.

Before contacting the support, READ THE FAQ: http://bioinfo.genotoul.fr/index.php/faq/

This tutorial aims at describing how to run R scripts and compile RMarkdown files on the Toulouse Bioinformatics cluster1. To do so, you need to have an account (ask for an account on this page http://bioinfo.genotoul.fr/index.php/ask-for/create-an-account/). You can then connect to the cluster using the ssh command on linux and Mac OS and using Mobaxterm2 on Windows. Similarly, you can copy files between the cluster and your computer using the scp command on linux and Mac OS and using OpenSSH3 on Windows. The login address is genobioinfo.toulouse.inrae.fr. Once you are connected, you have two solutions to run a script: running it in batch mode or starting an interactive session. The script must never be run on the first server you connect to. Also, be careful that the programs that you can use from the cluster are not available until you have loaded the corresponding module. How to manage modules is explained in Section 1.

All programs are made available by loading the corresponding modules. These are the main useful commands to work with modules:

module avail: list all available modules

search_module [TEXT]: find a module with keyword

module load [MODULE_NAME]: to load a module (for instance to load R, module load statistics/R/4.3.0). This command is either used directly (in interactive mode) or included in the file that is used to run your R script in batch mode (see below)

module purge: purge all previous loaded modules

To launch an R script on the slurm cluster:

Write an R script:

Write a bash script:

Launch the script with the sbatch command:

The scripts myscript.sh and HelloWorld.R are supposed to be located in the same directory from which the sbatch command is launched. For Rmd files (see Section 7), be careful that you cannot compile a document if the Rmd file is not in a writable directory.

Jobs can be launched with customized options (more memory, for instance). There are two ways to handle sbatch options:

[RECOMMENDED] at the beginning of the bash script with lines of the form: #SBATCH [OPTION] [VALUE]

in the sbatch command: sbatch [OPTION] [VALUE] [OPTION] [VALUE] […] myscript.sh

Many options are available. To see all options use sbatch –help. Useful options:

-J, –job-name=jobname: name of job

-e, –error=err: file for batch script’s standard error

-o, –output=out: file for batch script’s standard output

–mail-type=BEGIN,END,FAIL: send an email at the beginning, end or fail of the script (default email is your user email and can be changed with –mail-user=truc@bidule.fr, to use with care)

-t, –time=HH:MM:SS: time limit (default to 04:00:00)

–mem=XG: to change memory reservation (default to 4G)

-c, –cpus-per-task=ncpus: number of cpus required per task (default to 1)

–mem-per-cpu=XG: maximum amount of real memory per allocated cpu required by the job

After a job has been launched, you can monitor it with squeue -u [USERNAME] or squeue -j [JOB_ID] and also cancel it with scancel [JOB_ID].

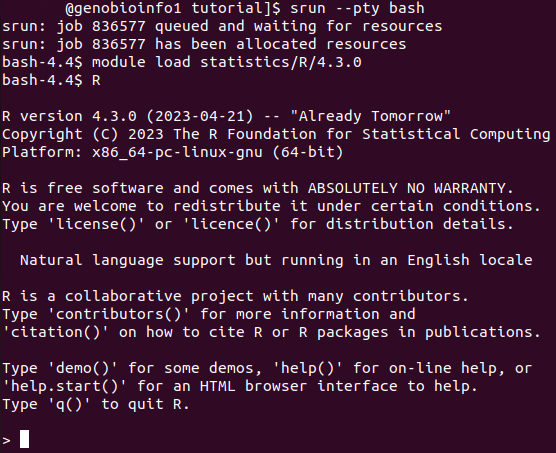

To use R in a console mode, use srun –pty bash to be connected to a node. Then, module load statistics/R/4.3.0 (for the latest R version) and R to launch R.

srun can be run with the same options than sbatch (cpu and memory reservations) (see Section 2.1).

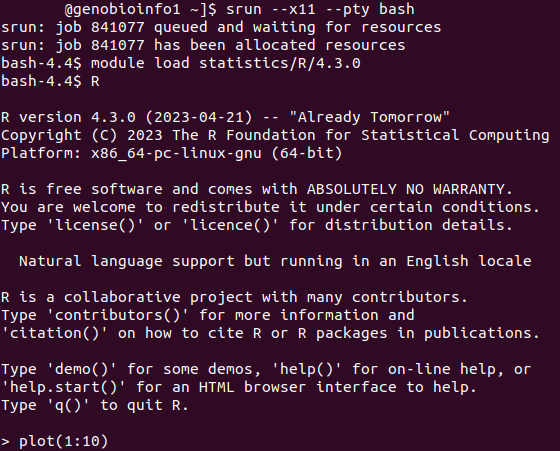

X11 sessions are useful to directly display plots in an interactive session. Prior their use, generate an ssh key with ssh-keygen and add it in authorized keys file with: cat .ssh/id_rsa.pub >> .ssh/authorized keys

The interactive session is then launched by:

To use R with a parallel environment, the -c (or –cpus-per-task) option for the sbatch and srun is needed. In the R script, the number of cores must be set to the SAME value.

Several packages exist to use parallel with R: doParallel, BiocParallel, and future (examples are provided for 2 parallel jobs and the first two packages).

External arguments can be passed to an R script. The basic method is described below but the package optparse provides ways to handle external arguments à la Python.

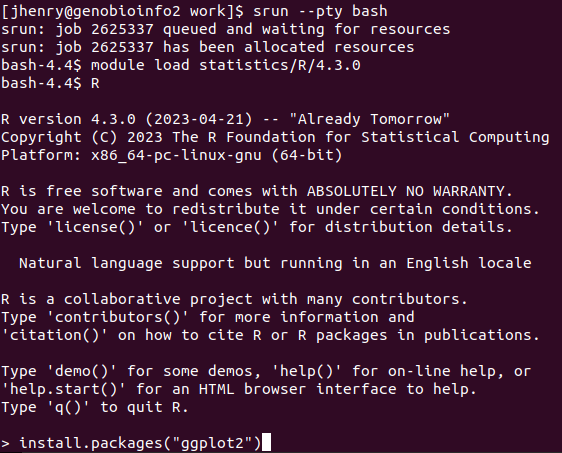

Once in an interactive R session, R packages are installed (in a personal library) using the standard install.packages command line.

Your personal library is usually located at the root of your personal directory whose allocated space is very limited. A simple solution consists in:

As a note, a few R packages are already installed inside an R version. For example, the package dplyr is already installed inside the module statistics/R/4.3.0. You can check if a package is pre-installed using the command search_R_package [PACKAGE_NAME]. In case you would like to have an additional package pre-installed in a given R version, you could request it here : https://bioinfo.genotoul.fr/index.php/ask-for/install-soft/.

To compile a .Rmd file, two packages are needed: rmarkdown and knitr. You also need to load the module tools/Pandoc/3.1.2.

As for an R script, you can pass external arguments to a .Rmd document.

---

title: My Document

output: html_document

params:

text: "Hi!"

---

What is your text?

'''{r}

print(params$text)

'''conda is a module on the cluster that gives you access to additional R versions. The following script creates a conda-R environment with R 4.2.0.